Overview of Research

Here are representative publications and descriptions of our ongoing research areas:

Heterophily and Graph Neural Networks

Graph neural networks (GNNs) have become one of the most popular graph deep learning models, achieving state-of-the-art results in semi-supervised node classification, where the goal is to infer the unknown labels of nodes in a partially labeled network with node features. While many different GNN models have been proposed, most of them perform best on graphs that exhibit homophily, often summarized as “birds of a feather flock together”, in which linked nodes tend to belong to the same class or have similar features. However, many real-world settings instead follow the principle that “opposites attract”, leading to networks that exhibit heterophily, in which linked nodes tend to be from different classes. Many of the most popular GNNs fail to generalize well to networks with heterophily (low homophily). Our group has led groundbreaking efforts in this space, ranging from identifying effective GNN designs and introducing new architectures that improve performance on heterophilous networks to theoretically and empirically analyzing the connections between heterophily and other well-known challenges of GNNs, including oversmoothing, robustness, performance discrepancies across nodes, and scalability.
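A common way to put a number on this property is the edge homophily ratio: the fraction of edges whose two endpoints share a class label, so values near 1 indicate homophily and values near 0 indicate heterophily. The Python sketch below is illustrative only; the function name `edge_homophily` and the edge-list/label-dictionary input format are assumptions, not code from the papers listed here.

```python
from typing import Dict, Hashable, Iterable, Tuple


def edge_homophily(edges: Iterable[Tuple[Hashable, Hashable]],
                   labels: Dict[Hashable, int]) -> float:
    """Hypothetical helper: fraction of edges whose endpoints share a label."""
    edge_list = list(edges)
    if not edge_list:
        raise ValueError("the graph has no edges")
    same_class = sum(1 for u, v in edge_list if labels[u] == labels[v])
    return same_class / len(edge_list)


# A 4-cycle 0-1-2-3-0 under three different labelings.
cycle = [(0, 1), (1, 2), (2, 3), (3, 0)]
print(edge_homophily(cycle, {0: 0, 1: 0, 2: 0, 3: 0}))  # 1.0: every edge is intra-class
print(edge_homophily(cycle, {0: 0, 1: 0, 2: 1, 3: 1}))  # 0.5: half the edges cross classes
print(edge_homophily(cycle, {0: 0, 1: 1, 2: 0, 3: 1}))  # 0.0: "opposites attract"
```

The same graph can therefore range from fully homophilous to fully heterophilous depending only on how its nodes are labeled.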

-

Heterophily and Graph Neural Networks: Past, Present and Future

Jiong Zhu, Yujun Yan, Mark Heimann, Lingxiao Zhao, Leman Akoglu, Danai Koutra

In IEEE Data Eng. Bull., 2023

[PDF coming soon] -

Unveiling the Impact of Local Homophily on GNN Fairness: In-Depth Analysis and New Benchmarks

Donald Loveland, Danai Koutra

Under review, 2024

[PDF coming soon] -

On Performance Discrepancies Across Local Homophily Levels in Graph Neural Networks

Donald Loveland, Jiong Zhu, Mark Heimann, Benjamin Fish, Michael Schaub, Danai Koutra

In LoG (Spotlight Presentation), 2023

[PDF coming soon] -

Two Sides of the Same Coin: Heterophily and Oversmoothing in Graph Convolutional Neural Networks

Yujun Yan, Milad Hashemi, Kevin Swersky, Yaoqing Yang, Danai Koutra

In ICDM, 2022

[PDF coming soon] -

How does Heterophily Impact the Robustness of Graph Neural Networks?: Theoretical Connections and Practical Implications

Jiong Zhu, Junchen Jin, Donald Loveland, Michael Schaub, Danai Koutra

In KDD, 2022

[PDF coming soon] -

Graph Neural Networks with Heterophily

Jiong Zhu, Ryan Rossi, Anup Rao, Tung Mai, Nedim Lipka, Nesreen Ahmed, Danai Koutra

In AAAI, 2021

[PDF coming soon] [Code] -

Beyond Homophily in Graph Neural Networks: Current Limitations and Effective Designs

Jiong Zhu, Yujun Yan, Lingxiao Zhao, Mark Heimann, Leman Akoglu, Danai Koutra

In NeurIPS, 2020

[PDF coming soon] [Code]

Characterizing GNN Behavior through Local Graph Structure

The aim of this work is to understand in depth how a trained GNN’s behavior changes when it is applied to prediction tasks with varying local graph structure. Because many GNN computations are localized, analyzing GNNs beyond a single global graph property reveals a variety of critical out-of-distribution problems with implications for performance, fairness, and robustness. In our lab, we focus on (a) characterizing such generalization challenges and (b) offering scalable solutions that bolster a GNN’s ability to adequately represent all data points. As many of our applications revolve around social network analysis, recommender systems, and personalization, such improvements provide tangible, real-world benefits by encouraging equitable treatment of all individuals.
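To make “local graph structure” concrete, one simple node-level measure is local homophily: for each node, the fraction of its neighbors that share its label. A graph can look homophilous globally while individual nodes sit in heterophilous neighborhoods. The sketch below assumes an undirected edge list; the name `local_homophily` is hypothetical, and the papers in this section may define local homophily differently (for example, over multi-hop neighborhoods).

```python
from collections import defaultdict
from typing import Dict, Hashable, Iterable, List, Tuple


def local_homophily(edges: Iterable[Tuple[Hashable, Hashable]],
                    labels: Dict[Hashable, int]) -> Dict[Hashable, float]:
    """Hypothetical helper: per-node share of 1-hop neighbors with the same label.

    Isolated nodes never appear in the edge list, so they are omitted.
    """
    neighbors: Dict[Hashable, List[Hashable]] = defaultdict(list)
    for u, v in edges:  # treat every edge as undirected
        neighbors[u].append(v)
        neighbors[v].append(u)
    return {
        node: sum(labels[n] == labels[node] for n in nbrs) / len(nbrs)
        for node, nbrs in neighbors.items()
    }


# A class-0 triangle plus one class-1 node attached to node 0.
edges = [(0, 1), (1, 2), (2, 0), (0, 3)]
labels = {0: 0, 1: 0, 2: 0, 3: 1}
scores = local_homophily(edges, labels)
# 3 of the 4 edges are intra-class (global ratio 0.75), yet node 3 scores 0.0
# and node 0 scores 2/3, while nodes 1 and 2 score 1.0.
print(scores)
```

A model evaluated only on the global ratio would miss that node 3 lives in a completely heterophilous neighborhood, which is the kind of discrepancy this line of work examines.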

-

Unveiling the Impact of Local Homophily on GNN Fairness: In-Depth Analysis and New Benchmarks

Donald Loveland, Danai Koutra

Under review, 2024

[PDF coming soon] -

On Performance Discrepancies Across Local Homophily Levels in Graph Neural Networks

Donald Loveland, Jiong Zhu, Mark Heimann, Benjamin Fish, Michael Schaub, Danai Koutra

In LoG (Spotlight Presentation), 2023

[PDF coming soon] -

On the Relationship Between Heterophily and Robustness of Graph Neural Networks

Jiong Zhu, Junchen Jin, Donald Loveland, Michael Schaub, Danai Koutra

In KDD, 2022

[PDF coming soon] -

On Graph Neural Network Fairness in the Presence of Heterophilous Neighborhoods

Donald Loveland, Jiong Zhu, Michael Schaub, Mark Heimann, Benjamin Fish, Danai Koutra

In KDD DLG Workshop, 2022

[PDF coming soon]

LLMs + Graphs

Many high-impact applications work with data that contain both structural information, in the form of graph topology, and textual information, in the form of natural-language graph- or node-level attributes. Our recent work proposes strategies for combining large language models with graph neural networks to build models that effectively and efficiently capture both graph structure and world knowledge for more powerful inference.

- Large Language Model Guided Graph Clustering

Puja Trivedi, Nurendra Choudhary, Eddie Huang, Vasileios Ioannidis, Karthik Subbian, Danai Koutra

Preprint, 2024

[PDF coming soon]

Self-supervised Graph Representation Learning

Self-supervised learning has led to more powerful, transferable, and robust models that can subsequently be applied to downstream tasks. Contrastive learning, in particular, has emerged as a powerful paradigm that learns representations by pulling “positive” data views together and pushing “negative” data views apart. Our recent work has rigorously studied the role of augmentations in graph contrastive learning by (i) demonstrating that popular generic augmentations can destroy task-relevant information, (ii) proposing a generalization bound for such generic augmentations, and (iii) creating a synthetic benchmark for evaluating automatic augmentation strategies.
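As a rough illustration of the contrastive idea, the sketch below computes an InfoNCE-style loss for a single anchor embedding: the loss is small when the anchor is more similar to its positive view than to the negatives. InfoNCE is one widely used contrastive objective, chosen here only as an example; it is not necessarily the objective analyzed in the papers below. Plain Python lists stand in for embedding vectors, and the function names and default temperature are assumptions.

```python
import math
from typing import List, Sequence


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity of two non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def info_nce(anchor: Sequence[float], positive: Sequence[float],
             negatives: List[Sequence[float]], temperature: float = 0.5) -> float:
    """-log of the softmax probability assigned to the positive view."""
    logits = [cosine(anchor, positive) / temperature]
    logits += [cosine(anchor, neg) / temperature for neg in negatives]
    # Log-sum-exp with the max subtracted for numerical stability.
    m = max(logits)
    log_normalizer = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_normalizer - logits[0]


anchor = [1.0, 0.0]
# Positive view close to the anchor, negatives far away: low loss.
good = info_nce(anchor, [0.9, 0.1], [[-1.0, 0.0], [0.0, 1.0]])
# Swap roles so a dissimilar vector is treated as the positive: high loss.
bad = info_nce(anchor, [0.0, 1.0], [[-1.0, 0.0], [0.9, 0.1]])
print(good < bad)  # True
```

In graph contrastive learning the positive views are typically produced by augmenting the same graph, which is why augmentations that destroy task-relevant information can undermine the learned representations.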

-

Analyzing Data-Centric Properties for Contrastive Learning on Graphs

Puja Trivedi, Ekdeep Singh Lubana, Mark Heimann, Danai Koutra, Jay Thiagarajan

In Advances in Neural Information Processing Systems (NeurIPS), 2022

[PDF coming soon] [Code] -

Augmentations in Graph Contrastive Learning: Current Methodological Flaws and Towards Better Practices

Puja Trivedi, Ekdeep Singh Lubana, Yujun Yan, Yaoqing Yang, Danai Koutra

In The ACM Web Conference (formerly WWW), 2022

[PDF coming soon] [Code]

Uncertainty Quantification for GNNs

As graph neural networks are increasingly deployed in high-risk decision-making scenarios, it becomes even more important for GNNs to be trustworthy, not just accurate. Reliable uncertainty quantification ensures that models are, intuitively, aware of what they don’t know. In our recent work, we focus on improving the calibration of GNN-based node and graph classifiers, as well as link predictors, under distribution shift.
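Calibration is commonly measured with the expected calibration error (ECE): predictions are grouped into confidence bins, and the gap between mean confidence and accuracy in each bin is averaged, weighted by the fraction of predictions in that bin. A minimal sketch with equal-width bins follows; the function name and the default of 10 bins are illustrative assumptions, and the code only measures calibration rather than implementing the estimation methods in the papers below.

```python
from typing import Sequence


def expected_calibration_error(confidences: Sequence[float],
                               correct: Sequence[bool],
                               n_bins: int = 10) -> float:
    """Equal-width-bin ECE: size-weighted mean of |accuracy - mean confidence|."""
    if not confidences or len(confidences) != len(correct):
        raise ValueError("inputs must be non-empty and equally long")
    bins = [[] for _ in range(n_bins)]
    for conf, ok in zip(confidences, correct):
        # A confidence of exactly 1.0 goes into the last bin instead of overflowing.
        bins[min(int(conf * n_bins), n_bins - 1)].append((conf, ok))
    n = len(confidences)
    ece = 0.0
    for members in bins:
        if members:
            mean_conf = sum(c for c, _ in members) / len(members)
            accuracy = sum(1 for _, ok in members if ok) / len(members)
            ece += len(members) / n * abs(accuracy - mean_conf)
    return ece


# Overconfident: 95% confidence but only half the predictions are right.
print(expected_calibration_error([0.95] * 4, [True, True, False, False]))  # about 0.45
# Calibrated: 75% confidence and 3 of 4 predictions are right.
print(expected_calibration_error([0.75] * 4, [True, True, True, False]))  # 0.0
```

Equal-width binning is the simplest choice; a classifier can score well on accuracy yet poorly on ECE, which is exactly the gap between being accurate and being trustworthy.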

-

Accurate and Scalable Estimation of Epistemic Uncertainty for Graph Neural Networks

Puja Trivedi, Mark Heimann, Rushil Anirudh, Danai Koutra, Jay Thiagarajan

In International Conference on Learning Representations (ICLR), 2024

[PDF coming soon] -

On Estimating Link Prediction Uncertainty using Stochastic Centering

Puja Trivedi, Danai Koutra, Jay Thiagarajan

In Proc. Int. Conf. on Acoustics, Speech and Signal Processing (ICASSP), 2024

[PDF coming soon]

Representation learning

The goal of network representation learning is to automatically learn low-dimensional embeddings that capture graph structural properties, as a principled alternative to heuristic and/or manual feature extraction. These methods, which are often inspired by or built directly on deep learning, have been shown to be highly effective in many downstream data mining and machine learning tasks. In our lab, we’re developing network representation learning techniques toward goals like node alignment across multiple graphs and role inference in social networks.

-

Deep Transfer Learning for Multi-source Entity Linkage via Domain Adaptation

Di Jin, Bunyamin Sisman, Hao Wei, Luna Xin Dong, Danai Koutra

In Proceedings of the VLDB Endowment (VLDB), September 2021

[PDF] -

On Generalizing Static Node Embedding to Dynamic Settings

Di Jin, Ryan Rossi, Sungchul Kim, Danai Koutra

In The Fifteenth International Conference on Web Search and Data Mining (WSDM), October 2021

[PDF] [Code] -

A Deep Dive Into Understanding The Random Walk-Based Temporal Graph Learning

Nishil Talati, Di Jin, Haojie Ye, Ajay Brahmakshatriya, Saman Amarasinghe, Trevor Mudge, Danai Koutra, Ronald Dreslinski

In the 2021 IEEE International Symposium on Workload Characterization (IISWC), November 2021

[PDF] [Code] -

Toward Activity Discovery in the Personal Web

Tara Safavi, Adam Fourney, Robert Sim, Marcin Juraszek, Shane Williams, Ned Friend, Danai Koutra, Paul N Bennett

In ACM International Conference on Web Search and Data Mining (WSDM), February 2020

[PDF] -

Distribution of Node Embeddings as Multiresolution Features for Graphs

Mark Heimann, Tara Safavi, Danai Koutra

In IEEE International Conference on Data Mining (ICDM), November 2019

[PDF] [Code]

Best Student Paper award -

node2bits: Compact Time- and Attribute-aware Node Representations for User Stitching

Di Jin, Mark Heimann, Ryan Rossi, Danai Koutra

In Proceedings of the ECML/PKDD European Conference on Principles and Practice of Knowledge Discovery in Databases, September 2019

[PDF] [Code] -

When to Remember Where You Came from: Node Representation Learning in Higher-order Networks

Caleb Belth, Fahad Kamran, Donna Tjandra, Danai Koutra

In Proceedings of the 2019 IEEE/ACM International Conference on Advances in Social Networks Analysis and Mining (ASONAM), August 2019

[PDF] -

Latent Network Summarization: Bridging Network Embedding and Summarization

Di Jin, Ryan Rossi, Eunyee Koh, Sungchul Kim, Anup Rao, Danai Koutra

In Proceedings of the 25th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining (KDD), August 2019

[PDF] [Code] -

Smart Roles: Inferring Professional Roles in Email Networks

Di Jin*, Mark Heimann*, Tara Safavi, Mengdi Wang, Wei Lee, Lindsay Snider, Danai Koutra

In Proceedings of the 25th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining (KDD), August 2019

[PDF] [Code] -

GroupINN: Grouping-based Interpretable Neural Network for Classification of Limited, Noisy Brain Data

Yujun Yan, Jiong Zhu, Marlena Duda, Eric Solarz, Chandra Sripada, Danai Koutra

In Proceedings of the 25th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining (KDD), August 2019

[PDF] [Code] -

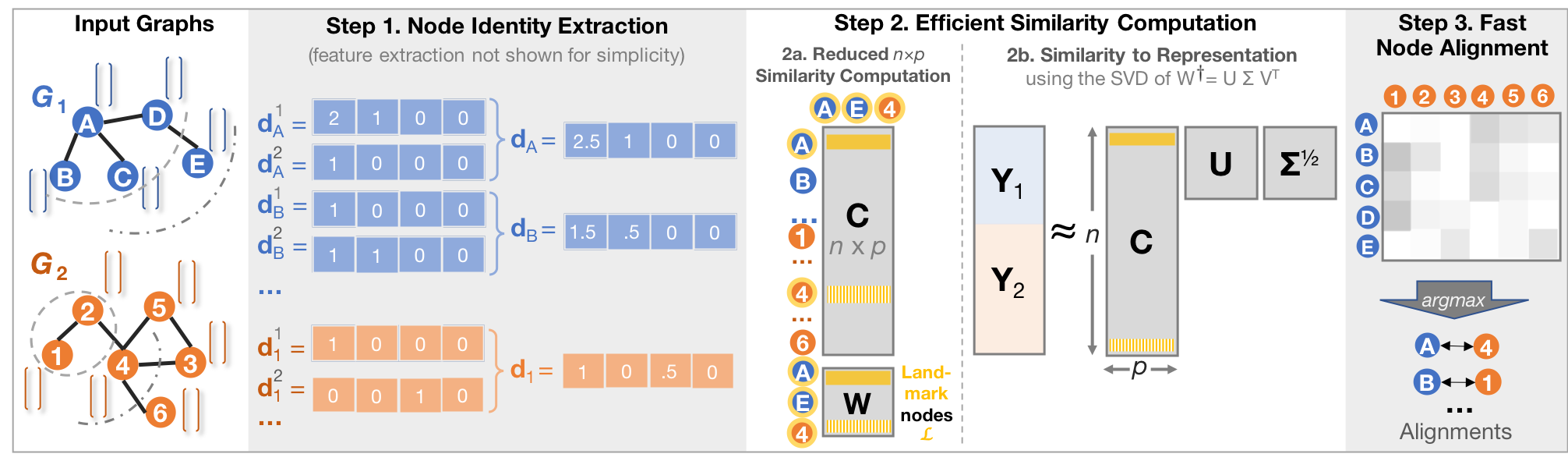

REGAL: Representation Learning-based Graph Alignment

Mark Heimann, Haoming Shen, Tara Safavi, Danai Koutra

In ACM Conference on Information and Knowledge Management (CIKM), October 2018

[PDF] [Code]

Multi-network analysis

Many important problems in the natural and social sciences involve not one but multiple networks, like protein-protein network alignment and user re-identification across social networks. Under this umbrella, we’re studying the problems of node alignment to identify corresponding entities across networks and graph similarity to quantify the similarity between two or more graphs.

-

Refining Network Alignment to Improve Matched Neighborhood Consistency

Mark Heimann, Xiyuan Chen, Fatemeh Vahedian, Danai Koutra

In Proceedings of the 2021 SIAM International Conference on Data Mining (SDM), April 2021

[PDF] [Code] -

Distribution of Node Embeddings as Multiresolution Features for Graphs

Mark Heimann, Tara Safavi, Danai Koutra

In IEEE International Conference on Data Mining (ICDM), November 2019

[PDF] [Code]

Best Student Paper award -

REGAL: Representation Learning-based Graph Alignment

Mark Heimann, Haoming Shen, Tara Safavi, Danai Koutra

In ACM Conference on Information and Knowledge Management (CIKM), October 2018

[PDF] [Code] -

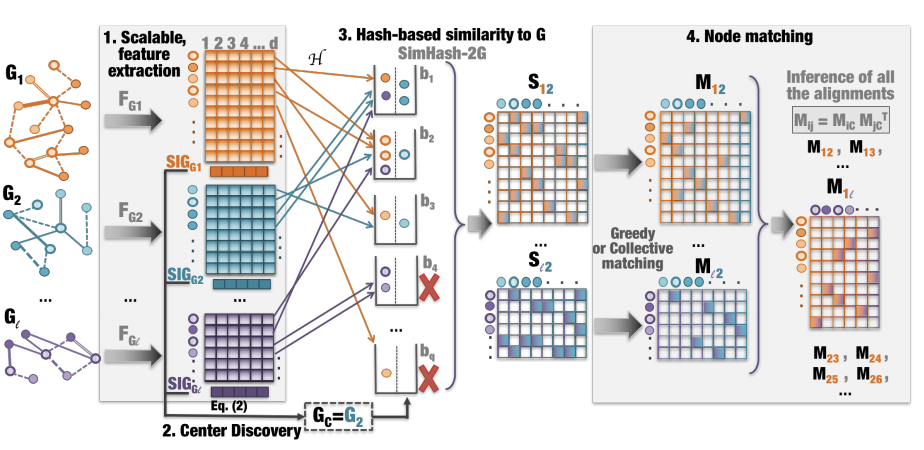

HashAlign: Hash-based Alignment of Multiple Graphs

Mark Heimann, Wei Lee, Shengjie Pan, Kuan-Yu Chen, Danai Koutra

In Proceedings of the 22nd Pacific-Asia Conference on Knowledge Discovery and Data Mining (PAKDD), April 2018

[PDF] [Code] -

BIG-ALIGN: Fast Bipartite Graph Alignment

Danai Koutra, Hanghang Tong, David Lubensky

In IEEE 13th International Conference on Data Mining (ICDM), December 2013

[PDF] -

Network similarity via multiple social theories

Michele Berlingerio, Danai Koutra, Tina Eliassi-Rad, Christos Faloutsos

In Advances in Social Networks Analysis and Mining 2013 (ASONAM), August 2013

[PDF] -

DeltaCon: A Principled Massive-Graph Similarity Function

Danai Koutra, Joshua Vogelstein, Christos Faloutsos

In Proceedings of the 13th SIAM International Conference on Data Mining (SDM), May 2013

[PDF]

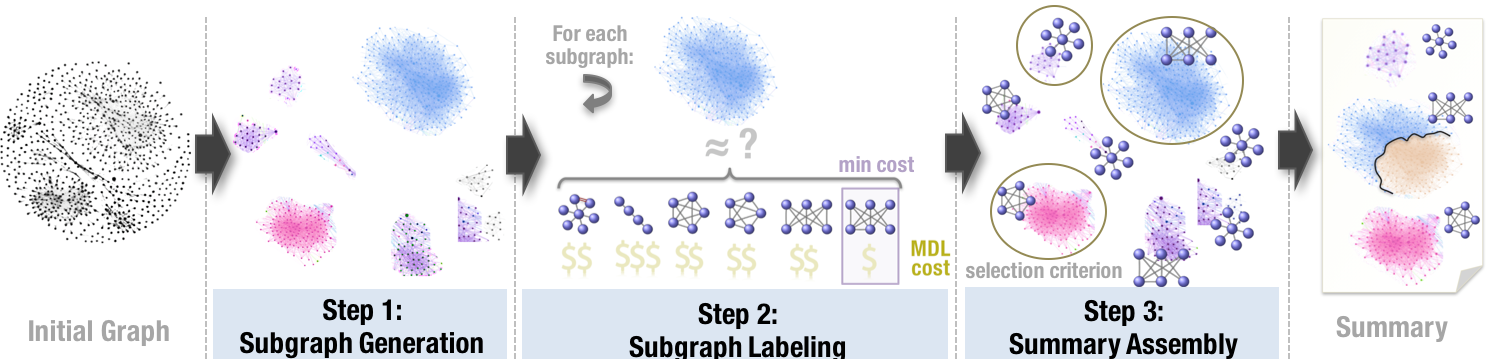

Graph summarization

While recent advances in computing resources have made it possible to process enormous amounts of data, the human ability to quickly identify patterns in such data has not scaled accordingly. Computational methods for condensing and simplifying data are thus a crucial part of the data-driven decision-making process. Similar to text summarization, which shortens a body of text while retaining its meaning and important information, the goal of graph summarization is to create a smaller, abstracted version of a large graph by describing it with its most “important” or “interesting” structures.

-

Mining Persistent Activity in Continually Evolving Networks

Caleb Belth, Carol Xinyi Zheng, Danai Koutra

In Proceedings of the 26th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining (KDD), May 2020

[PDF] [Code] -

What is Normal, What is Strange, and What is Missing in a Knowledge Graph: Unified Characterization via Inductive Summarization

Caleb Belth, Carol Xinyi Zheng, Jilles Vreeken, Danai Koutra

In The Web Conference, February 2020

[PDF] [Code] -

Personalized Knowledge Graph Summarization: From the Cloud to Your Pocket

Tara Safavi, Caleb Belth, Lukas Faber, Davide Mottin, Emmanuel Müller, Danai Koutra

In IEEE International Conference on Data Mining (ICDM), November 2019

[PDF] [Code] -

Latent Network Summarization: Bridging Network Embedding and Summarization

Di Jin, Ryan Rossi, Eunyee Koh, Sungchul Kim, Anup Rao, Danai Koutra

In Proceedings of the 25th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining (KDD), August 2019

[PDF] [Code] -

Graph Summarization Methods and Applications: A Survey

Yike Liu, Tara Safavi, Abhilash Dighe, Danai Koutra

In ACM Computing Surveys (CSUR), June 2018

[PDF] -

Reducing large graphs to small supergraphs: a unified approach

Yike Liu, Tara Safavi, Neil Shah, Danai Koutra

In Social Network Analysis and Mining (SNAM), February 2018

[PDF] [Demo] -

Exploratory Analysis of Graph Data by Leveraging Domain Knowledge

Di Jin, Danai Koutra

In IEEE International Conference on Data Mining (ICDM), November 2017

[PDF] [Code] -

TimeCrunch: Interpretable Dynamic Graph Summarization

Neil Shah, Danai Koutra, Tianmin Zou, Brian Gallagher, Christos Faloutsos

In Proceedings of the 21st ACM SIGKDD International Conference on Knowledge Discovery and Data Mining (KDD), August 2015

[PDF] -

VOG: Summarizing and Understanding Large Graphs

Danai Koutra, U Kang, Jilles Vreeken, Christos Faloutsos

In Proceedings of the 2014 SIAM International Conference on Data Mining (SDM), April 2014

[PDF] [Code]

Best paper nominee

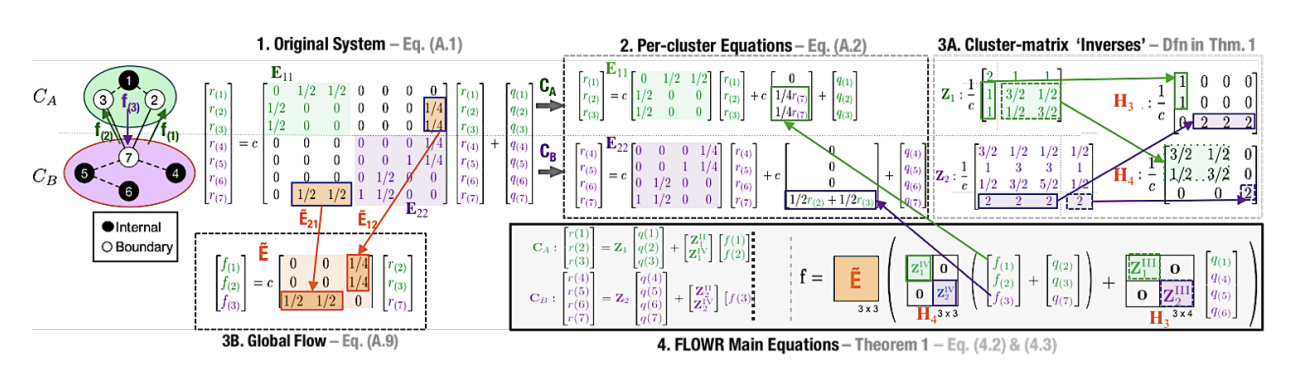

Distributed graph methods

Many graph mining tasks involve iteratively solving linear systems: for example, classifying entities in a network setting with limited supervision and finding similar nodes. As data volumes grow, faster methods for solving linear systems are becoming increasingly important. We work on speeding up such methods for large graphs in both sequential and distributed environments, exploring tradeoffs between computational complexity and accuracy for both static and dynamic graphs.
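Random walk with restart (RWR) is one example of such a linear system: with a column-normalized transition matrix W, restart probability c, and a one-hot seed vector e, the proximity scores satisfy r = (1 - c) W r + c e. The sketch below solves it by plain power iteration on adjacency lists. This is the simple baseline approach, not the faster flow-based method of the SDM 2018 paper below, and the function name, restart value, and tolerance are assumptions.

```python
from typing import Dict, Hashable, List


def random_walk_with_restart(adj: Dict[Hashable, List[Hashable]], seed: Hashable,
                             restart: float = 0.15, tol: float = 1e-10,
                             max_iter: int = 1000) -> Dict[Hashable, float]:
    """Power iteration for r = (1 - c) * W r + c * e_seed.

    W spreads each node's score evenly over its out-neighbors (column-normalized).
    Nodes with no out-neighbors simply leak their mass in this simple sketch.
    """
    r = {v: 0.0 for v in adj}
    r[seed] = 1.0
    for _ in range(max_iter):
        nxt = {v: 0.0 for v in adj}
        for u, nbrs in adj.items():
            if nbrs:
                share = (1 - restart) * r[u] / len(nbrs)
                for v in nbrs:
                    nxt[v] += share
        nxt[seed] += restart  # teleport back to the seed with probability c
        delta = sum(abs(nxt[v] - r[v]) for v in adj)  # L1 change between iterates
        r = nxt
        if delta < tol:
            break
    return r


# Undirected path 0 - 1 - 2 - 3, stored as symmetric adjacency lists.
path = {0: [1], 1: [0, 2], 2: [1, 3], 3: [2]}
scores = random_walk_with_restart(path, seed=0)
# Proximity to seed 0 falls off along the path: node 1 > node 2 > node 3.
print({v: round(s, 3) for v, s in scores.items()})
```

Each iteration costs time linear in the number of edges, and one full solve is needed per seed, which is why the multi-query setting benefits from faster, specialized solvers.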

-

Fast Flow-based Random Walk with Restart in a Multi-query Setting

Yujun Yan, Mark Heimann, Di Jin, Danai Koutra

In Proceedings of the 2018 SIAM International Conference on Data Mining (SDM), February 2018

[PDF] [Code] -

Linearized and Single-pass Belief Propagation

Wolfgang Gatterbauer, Stephan Günnemann, Danai Koutra, Christos Faloutsos

In Proceedings of the VLDB Endowment (VLDB), January 2015

[PDF] -

Unifying Guilt-by-Association Approaches: Theorems and Fast Algorithms

Danai Koutra, Tai-You Ke, U Kang, Duen Horng (Polo) Chau, Hsing-Kuo Kenneth Pao, Christos Faloutsos

In Joint European Conference on Machine Learning and Knowledge Discovery in Databases (ECML/PKDD), September 2011

[PDF]

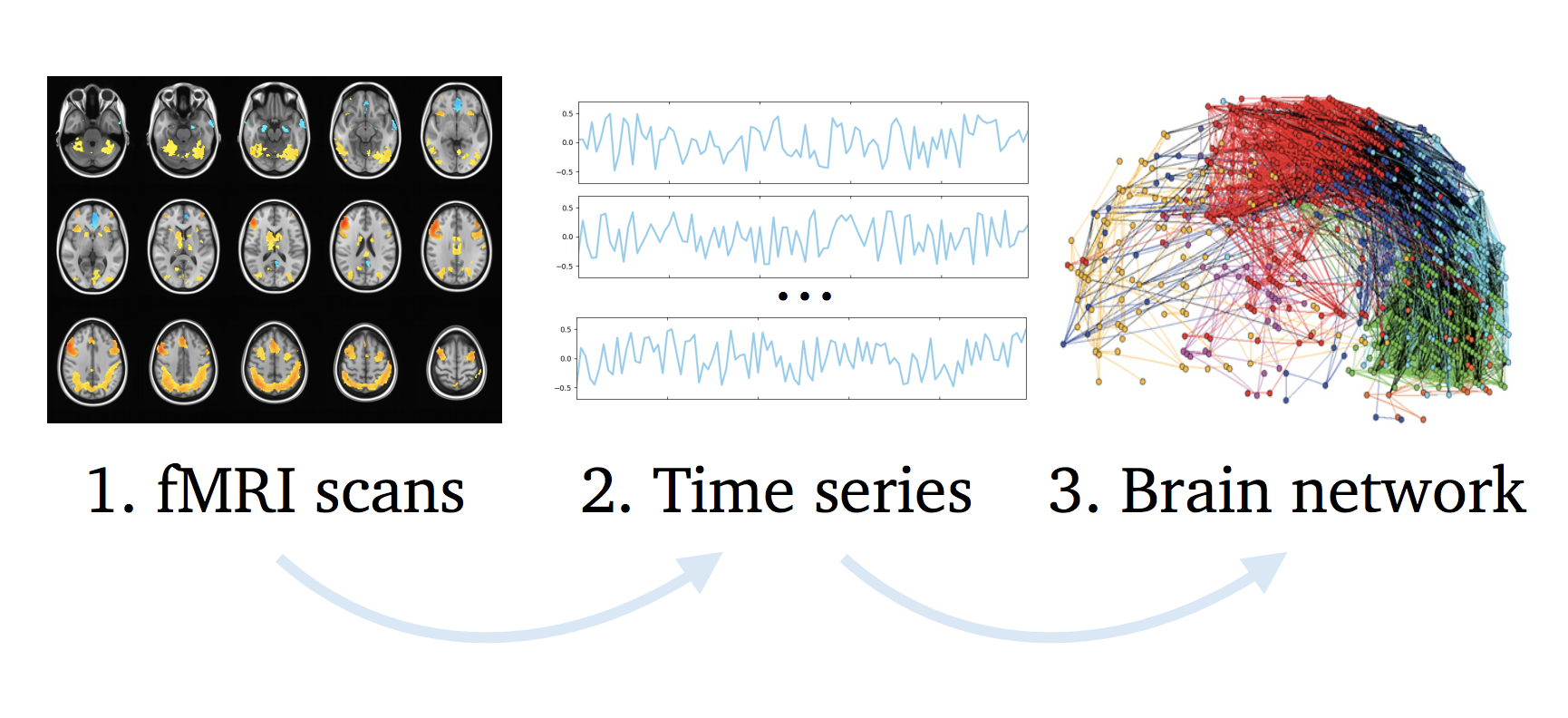

Brain network analysis

Advances in brain imaging machinery have led to the generation of brain maps that describe the brain’s network organization. Analyzing these networks may be key to understanding a variety of brain processes, like maturation, aging, and disease. In our lab, we focus on efficient network-theoretical methods to aid neuroscience practitioners in tasks like abnormality detection and network inference.

-

GroupINN: Grouping-based Interpretable Neural Network for Classification of Limited, Noisy Brain Data

Yujun Yan, Jiong Zhu, Marlena Duda, Eric Solarz, Chandra Sripada, Danai Koutra

In Proceedings of the 25th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining (KDD), August 2019

[PDF] [Code] -

Fast Network Discovery on Sequence Data via Time-Aware Hashing

Tara Safavi, Chandra Sripada, Danai Koutra

In Knowledge and Information Systems (KAIS), August 2018

[PDF]

Invited from ICDM 2017 -

Scalable Hashing-based Network Discovery

Tara Safavi, Chandra Sripada, Danai Koutra

In IEEE International Conference on Data Mining (ICDM), November 2017

[PDF] [Code]

Best paper nominee -

DeltaCon: A Principled Massive-Graph Similarity Function

Danai Koutra, Joshua Vogelstein, Christos Faloutsos

In Proceedings of the 13th SIAM International Conference on Data Mining (SDM), May 2013

[PDF]

User modeling

Massive amounts of available online user information, for example in social networks, online marketplaces, and music and video streaming services, have made it possible to analyze and understand user behavior over time at a very large scale. In this area, we’re exploring network-theoretical approaches to user modeling for several different applications, like product and service design, news consumption, and career trajectories.

-

A Deep Dive Into Understanding The Random Walk-Based Temporal Graph Learning

Nishil Talati, Di Jin, Haojie Ye, Ajay Brahmakshatriya, Saman Amarasinghe, Trevor Mudge, Danai Koutra, Ronald Dreslinski

In the 2021 IEEE International Symposium on Workload Characterization (IISWC), November 2021

[PDF] [Code] -

Smart Roles: Inferring Professional Roles in Email Networks

Di Jin*, Mark Heimann*, Tara Safavi, Mengdi Wang, Wei Lee, Lindsay Snider, Danai Koutra

In Proceedings of the 25th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining (KDD), August 2019

[PDF] [Code] -

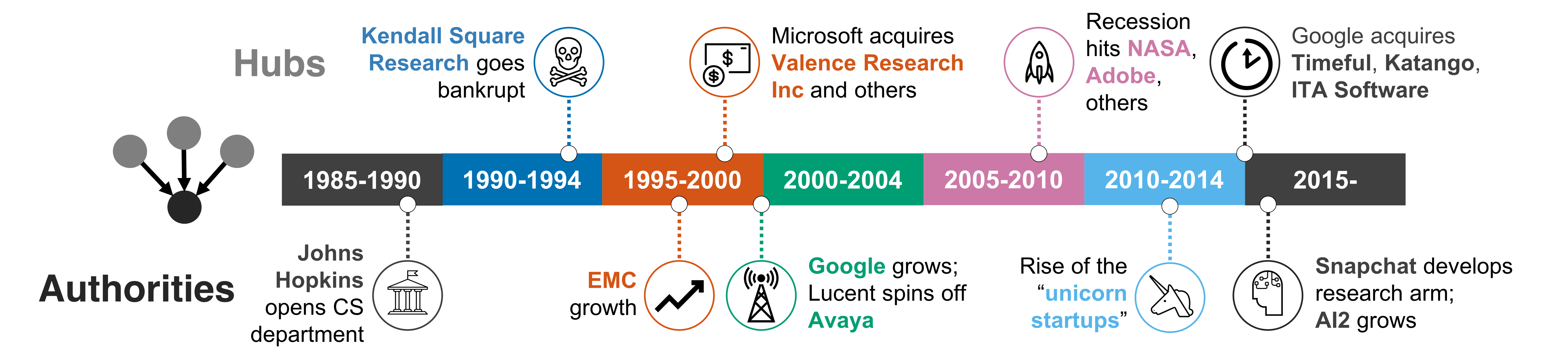

Career Transitions and Trajectories: A Case Study in Computing

Tara Safavi, Maryam Davoodi, Danai Koutra

In Proceedings of the 24th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining (KDD), August 2018

[PDF] [Data] -

PNP: Fast Path Ensemble Method for Movie Design

Danai Koutra, Abhilash Dighe, Smriti Bhagat, Udi Weinsberg, Stratis Ioannidis, Christos Faloutsos, Jean Bolot

In Proceedings of the 23rd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining (KDD), August 2017

[PDF] -

If Walls Could Talk: Patterns and Anomalies in Facebook Wallposts

Pravallika Devineni, Danai Koutra, Michalis Faloutsos, Christos Faloutsos

In Proceedings of the 2015 IEEE/ACM International Conference on Advances in Social Networks Analysis and Mining (ASONAM), August 2015

[PDF] -

Events and Controversies: Influences of a Shocking News Event on Information Seeking

Danai Koutra, Paul Bennett, Eric Horvitz

In 24th International World Wide Web Conference (WWW), May 2015

[PDF]

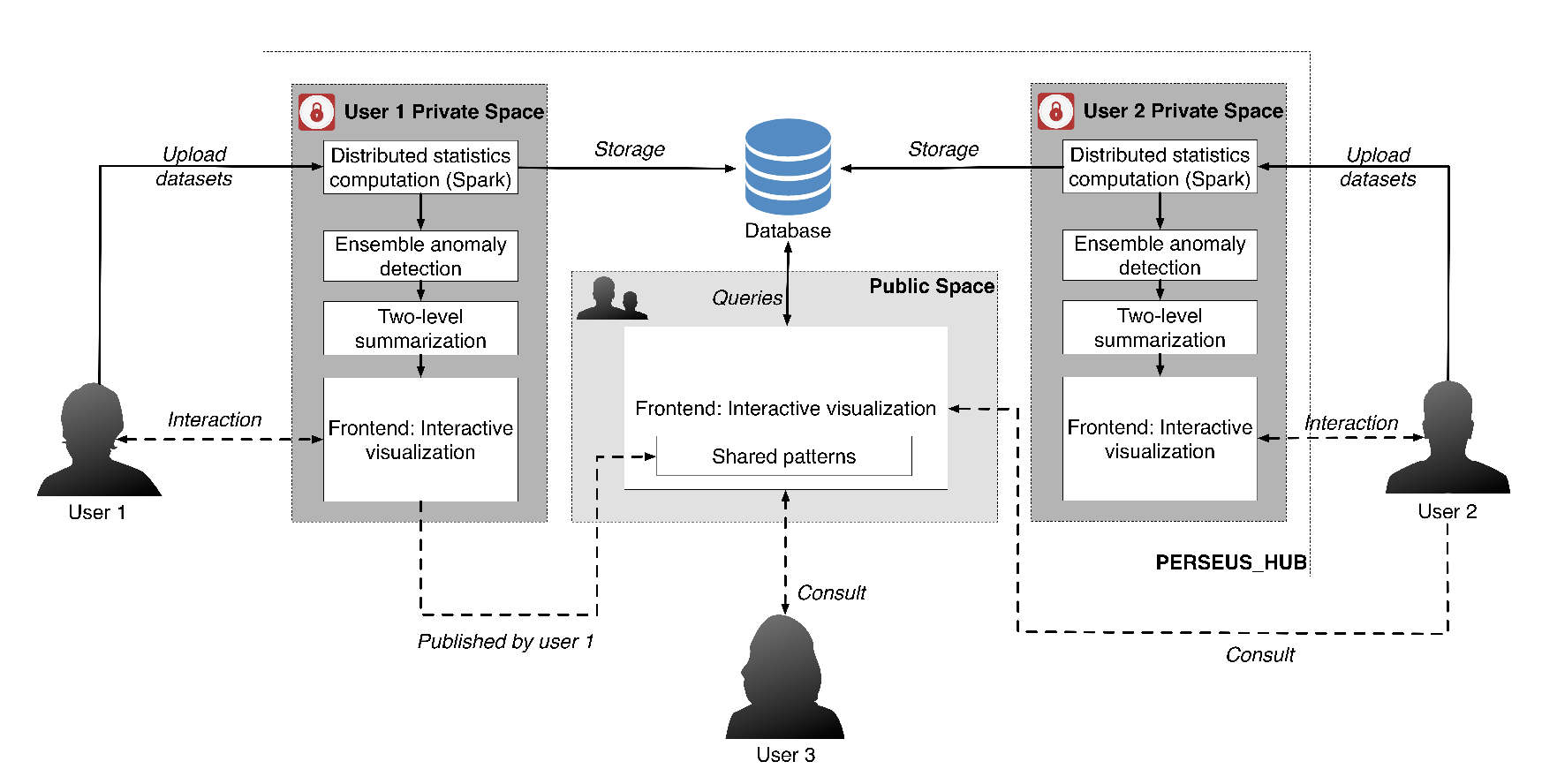

Interactive graph analytics

The initial steps of data exploration often include visual and statistical analysis, but exploration can be time-consuming (and even unrealistic) for large-scale graphs. To help users explore their graphs, we focus on two directions. The first is visualization-based platforms that allow users to interact with graph data without being overwhelmed. The second is efficient exploratory methods for reducing the dimensionality of graph data.

-

Exploratory Analysis of Graph Data by Leveraging Domain Knowledge

Di Jin, Danai Koutra

In IEEE International Conference on Data Mining (ICDM), November 2017

[PDF] [Code] -

PERSEUS-HUB: Interactive and Collective Exploration of Large-Scale Graphs

Di Jin, Aristotelis Leventidis, Haoming Shen, Ruowang Zhang, Junyue Wu, Danai Koutra

In Informatics, June 2017

[PDF] [Code] -

PERSEUS3: Visualizing and Interactively Mining Large-Scale Graphs

Di Jin, Ticha Sethapakdi, Danai Koutra, Christos Faloutsos

In SIGKDD Mining and Learning with Graphs Workshop (KDD MLG), August 2016

[PDF] -

Perseus: an interactive large-scale graph mining and visualization tool

Danai Koutra, Di Jin, Yuanchi Ning, Christos Faloutsos

In Proceedings of the VLDB Endowment (VLDB), September 2015

[PDF] -

Graph-based Anomaly Detection and Description: A Survey

Leman Akoglu, Hanghang Tong, Danai Koutra

In Data Mining and Knowledge Discovery (DAMI), 2014

[PDF] -

OPAvion: Mining and Visualization in Large Graphs

Leman Akoglu, Duen Horng Chau, U Kang, Danai Koutra, Christos Faloutsos

In Proceedings of the 2012 ACM SIGMOD International Conference on Management of Data (SIGMOD), 2012

[PDF]

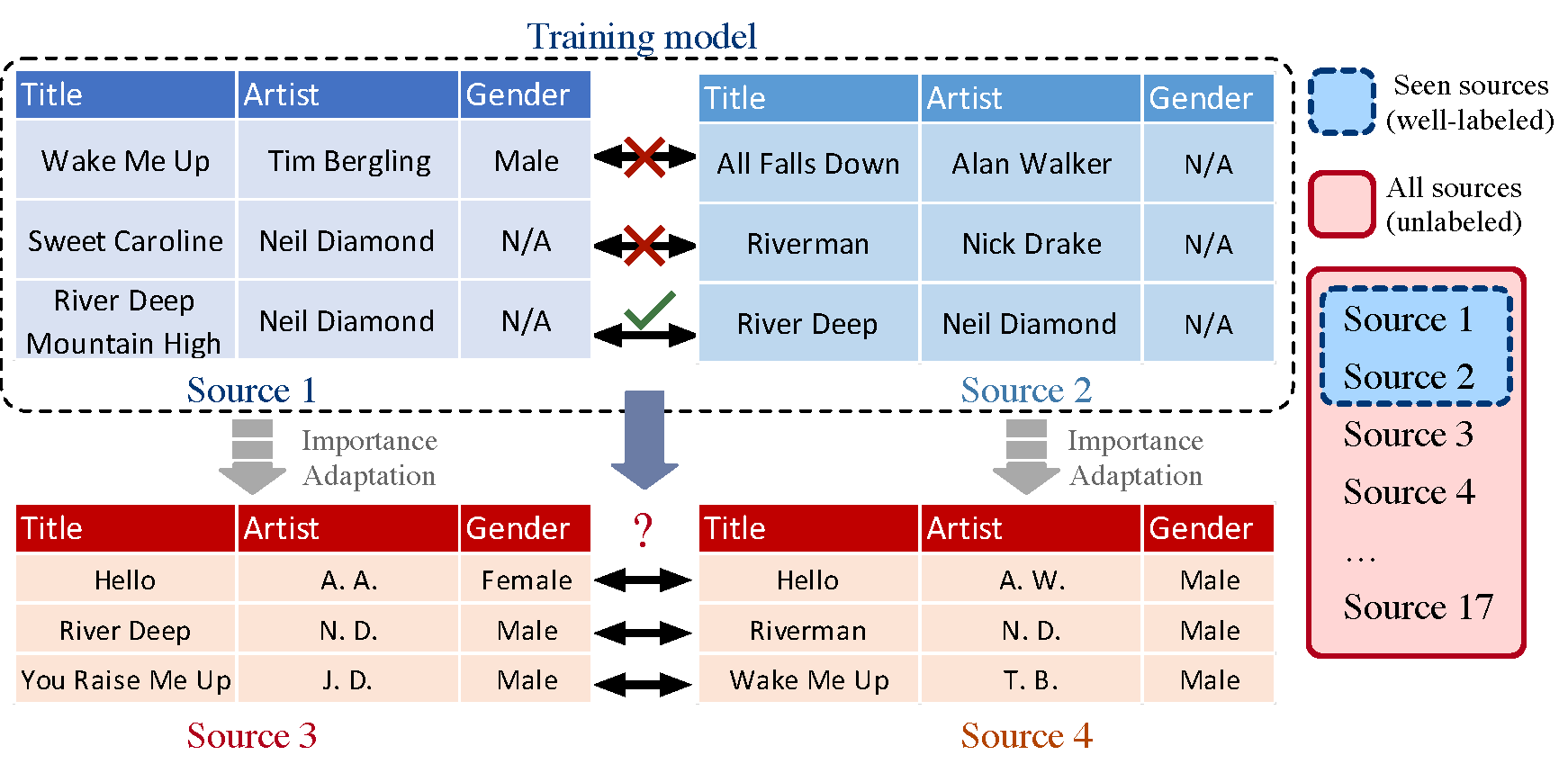

Transfer learning

Transfer learning (TL) is a research problem in machine learning (ML) that focuses on taking knowledge learned while solving one problem in a particular domain and applying it to a different but related problem in the same or a different domain. The specific transferable knowledge that bridges the source and target domains has a significant impact on model performance.

- Deep Transfer Learning for Multi-source Entity Linkage via Domain Adaptation

Di Jin, Bunyamin Sisman, Hao Wei, Luna Xin Dong, Danai Koutra

In Proceedings of the VLDB Endowment (VLDB), September 2021

[PDF]